When I run the following code:

```python
from nnsight import LanguageModel
import torch

# dispatch=True loads the model weights immediately instead of lazily
model = LanguageModel("bigscience/bloom-7b1", dispatch=True)

with torch.no_grad():
    with model.trace() as tracer:
        with tracer.invoke('asdjkgsdjgsdkgjs') as invoker:
            # Outputs of the individual submodules of the first block's MLP
            a = model.transformer.h[0].mlp.dense_h_to_4h.output.save()
            b = model.transformer.h[0].mlp.gelu_impl.output.save()
            c = model.transformer.h[0].mlp.dense_4h_to_h.output.save()
            # Output of the MLP module as a whole
            d = model.transformer.h[0].mlp.output.save()

# Exact pairwise comparisons of the saved tensors
print(torch.equal(a, b))
print(torch.equal(a, c))
print(torch.equal(a, d))
print(torch.equal(b, c))
print(torch.equal(b, d))
print(torch.equal(c, d))
```

Output:

```
False
False
False
False
False
False
```

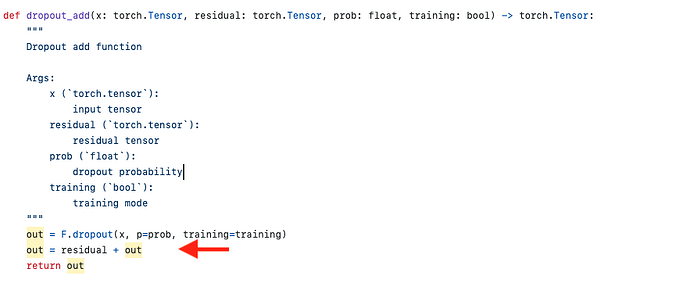

None of the MLP's submodule outputs (variables `a`, `b` and `c`) match the value I get from `model.transformer.h[0].mlp.output.save()` (variable `d`).
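Part of this is expected from how `torch.equal` works: it returns `True` only when both tensors have the same shape and identical elements, and returns `False` (rather than raising) on a shape mismatch. Going by the module printout, `dense_h_to_4h` and `gelu_impl` produce 16384 features while `dense_4h_to_h` and the MLP produce the 4096-wide hidden size, so comparing `a`/`b` against `c`/`d` can only give `False`, and `a` vs `b` differ because GELU is applied in between. A minimal pure-PyTorch check with random stand-in tensors (batch size 1 and sequence length 5 are arbitrary assumptions, not values from the run above):

```python
import torch
import torch.nn.functional as F

# Random stand-ins with the shapes the saved bloom-7b1 outputs should have
seq_len = 5
a = torch.randn(1, seq_len, 16384)  # shaped like dense_h_to_4h / gelu_impl output
c = torch.randn(1, seq_len, 4096)   # shaped like dense_4h_to_h / mlp output

# Different shapes: torch.equal returns False instead of raising an error
print(torch.equal(a, c))            # False
# Same shape and identical elements: True
print(torch.equal(c, c.clone()))    # True
# Same shape, but values changed by a nonlinearity: False
print(torch.equal(a, F.gelu(a)))    # False
```

So only `torch.equal(c, d)` is a comparison that could meaningfully have come out `True`.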

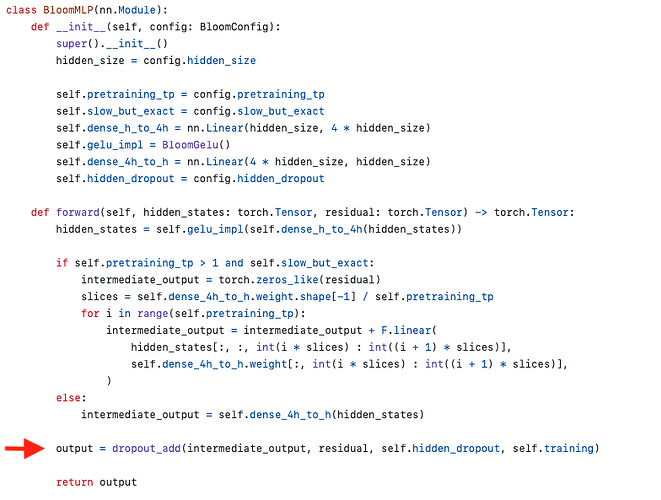

The MLP for “bigscience/bloom-7b1” is defined as:

```
(mlp): BloomMLP(
  (dense_h_to_4h): Linear(in_features=4096, out_features=16384, bias=True)
  (gelu_impl): BloomGelu()
  (dense_4h_to_h): Linear(in_features=16384, out_features=4096, bias=True)
)
```
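One hypothesis worth testing for the `c` vs `d` mismatch: the printout only lists submodules, not what `forward` does with them. Below is a toy module with the same submodule names (tiny hypothetical dimensions, random weights). Its `forward` is an assumption modelled on the Hugging Face transformers `BloomMLP.forward`, which, as far as I can tell, also receives a `residual` tensor and returns `dropout_add(dense_4h_to_h(...), residual, ...)`. Plain PyTorch forward hooks stand in for nnsight's `.output` here; this is a sketch, not the real model.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ToyBloomMLP(nn.Module):
    """Hypothetical stand-in with BloomMLP's submodule names.

    Assumption: like the HF BloomMLP, forward takes the residual stream
    and adds it back (through dropout) before returning.
    """

    def __init__(self, hidden: int = 8, dropout: float = 0.0):
        super().__init__()
        self.dense_h_to_4h = nn.Linear(hidden, 4 * hidden)
        # Tanh-approximated GELU, assumed to mirror BloomGelu
        self.gelu_impl = nn.GELU(approximate="tanh")
        self.dense_4h_to_h = nn.Linear(4 * hidden, hidden)
        self.hidden_dropout = dropout

    def forward(self, hidden_states, residual):
        h = self.gelu_impl(self.dense_h_to_4h(hidden_states))
        intermediate_output = self.dense_4h_to_h(h)
        # Equivalent of dropout_add: residual + dropout(intermediate_output)
        out = F.dropout(intermediate_output, p=self.hidden_dropout, training=self.training)
        return residual + out


torch.manual_seed(0)
mlp = ToyBloomMLP().eval()  # eval mode: dropout is a no-op
x = torch.randn(1, 3, 8)
residual = torch.randn(1, 3, 8)

# Record each submodule's output, similar to reading .output in nnsight
captured = {}
for name in ["dense_h_to_4h", "gelu_impl", "dense_4h_to_h"]:
    getattr(mlp, name).register_forward_hook(
        lambda module, inputs, output, name=name: captured.__setitem__(name, output)
    )

with torch.no_grad():
    d = mlp(x, residual)  # analogous to mlp.output

c = captured["dense_4h_to_h"]
print(torch.equal(c, d))             # False: d also contains the residual
print(torch.equal(c + residual, d))  # True in eval mode
```

If the real module behaves like this, `mlp.output` would be the `dense_4h_to_h` output plus the residual stream passed into the MLP, which would explain why it matches none of `a`, `b` or `c`.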

What does mlp.output correspond to in this case?